As artificial intelligence (AI) sweeps across the globe, AI servers have become the core infrastructure driving the technological revolution. From the training and inference of large language models to real-time decision-making in autonomous driving, from scientific simulations to intelligent healthcare, these emerging computing-intensive tasks rely on GPU clusters and heterogeneous computing architectures of an unprecedented scale. Yet, behind this edifice of intelligence built by chips and algorithms, a frequently overlooked component plays a decisive role—the AI server power supply.

As the "energy foundation" supporting stable computing operations, AI server power supplies have surpassed traditional power equipment in importance, becoming a critical point and technological high ground that shapes the development of the AI industry.

01

"The Strong Heart of the Computing Revolution"

AI server power supplies are not merely energy providers, but the bottleneck determining whether high-density computing power can be delivered safely, stably, and efficiently. Compared to traditional servers, AI server power supplies must contend with three major challenges: kilowatt-level power density, instantaneous current spikes, and extreme energy efficiency requirements. Their reliability directly affects computing assets worth millions of dollars and critical research and development processes. A millisecond-level power interruption could disrupt a model that has been training for weeks or even cause permanent hardware damage. Meanwhile, even marginal improvements in energy efficiency can translate into significant operational cost savings and a smaller carbon footprint.

Therefore, AI server power supplies have transcended the category of traditional components. They represent the "strong heart" of the computing revolution in the intelligent era—a pivotal force balancing performance, reliability, and sustainable development.

02

Analysis of Industry Trends

[1] Explosive Market Growth

As generative AI models surpass the trillion-parameter scale and autonomous driving training data grows by petabytes per day, the power demand of AI Data Centers (AIDC) is growing rapidly. Relevant data indicates that the market for power supplies installed outside the cabinet is projected to reach 99.6 billion CNY by 2030 (CAGR 50%), while the global market for server power supplies is expected to hit 147 billion CNY (CAGR 42%). Within this segment, the share of AI server power supplies is anticipated to increase from 40% in 2024 to 45% by 2030.
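The growth figures above follow the usual compound-growth relation, end value = start value × (1 + CAGR)^years. As a rough sketch, the snippet below backs out the starting market size implied by a 2030 figure and its CAGR. The six-year span (2024 to 2030) is an assumption, since the base year of each CAGR is not stated here, and the function names are illustrative only.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1.0 + cagr) ** years


if __name__ == "__main__":
    YEARS = 6  # assumption: CAGR is measured from 2024 to 2030
    forecasts = [
        ("Outside-cabinet power supplies", 99.6, 0.50),
        ("Global server power supplies", 147.0, 0.42),
    ]
    for label, end_bn_cny, cagr in forecasts:
        start = implied_start_value(end_bn_cny, cagr, YEARS)
        print(f"{label}: implied 2024 base of about {start:.1f} billion CNY")
```

Changing `YEARS` shows how sensitive the implied base is to the assumed starting year.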

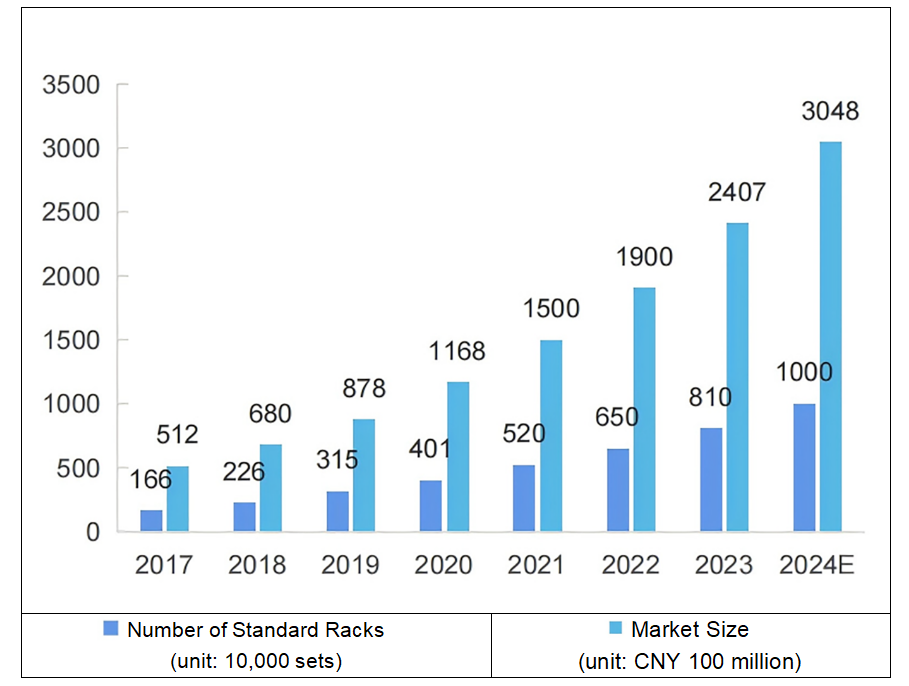

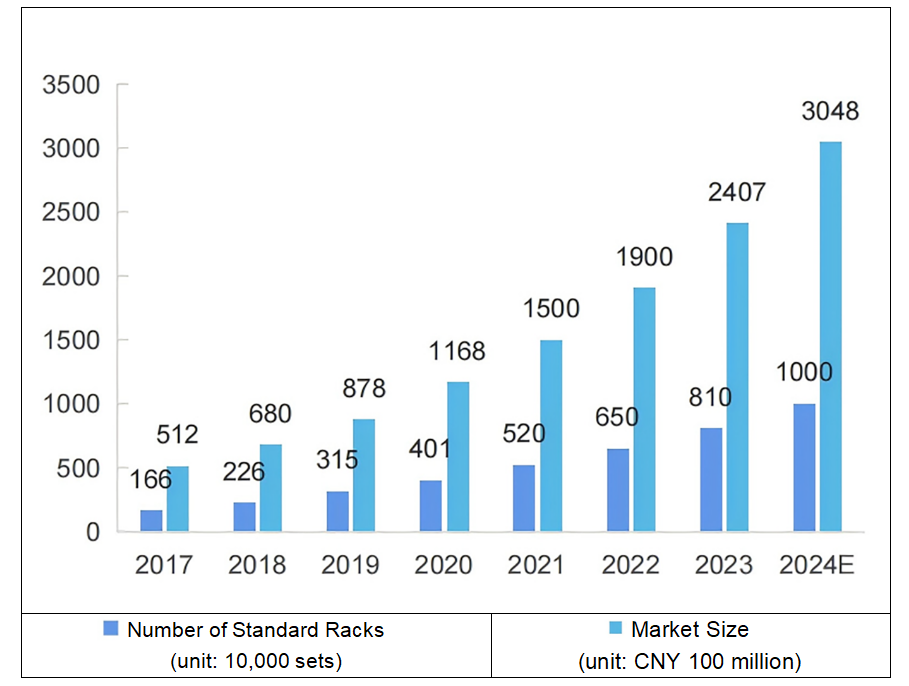

Number of Standard Racks in Operation and Market Size of Data Centers in Mainland China from 2017 to 2024

[2] Rapid Increase in Single-Chip Power Consumption & Accelerated Transformation of AIDC Power Architectures

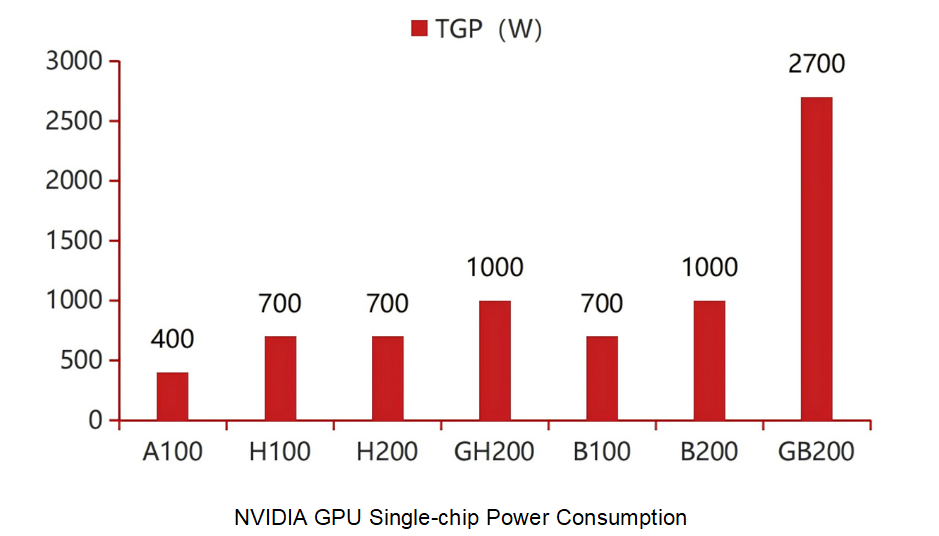

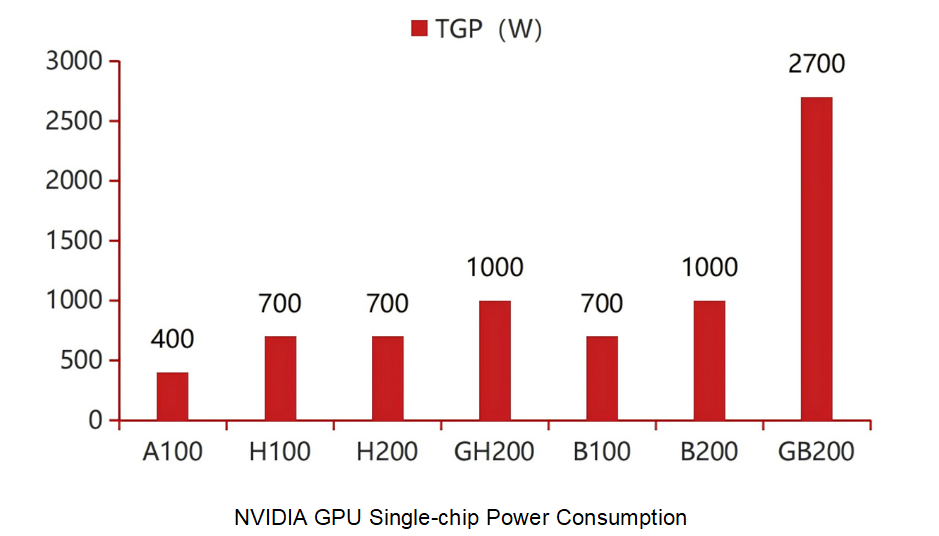

In terms of chips, NVIDIA's H100 and H200 have a single-chip power consumption of 700W, while the GB200 reaches 2700W, representing a significant increase in per-chip power consumption. Regarding server configurations, AIDCs need to deploy high-power GPU/TPU servers, with per-rack power density reaching 5~10 times that of traditional IDCs. To reduce energy conversion stages, minimize current loss, and enhance power density in data center power supply, server power systems are evolving towards high-voltage architectures.
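The case for higher bus voltages comes from basic circuit relations: for a fixed power P, the current is I = P / V, and the conduction loss in the distribution path is I²R. The sketch below compares a few bus voltages for one rack. The rack power and path resistance are hypothetical values chosen only for illustration, and the function names are not taken from any standard.

```python
def bus_current_a(power_w: float, bus_voltage_v: float) -> float:
    """Current drawn from the bus for a given delivered power (I = P / V)."""
    return power_w / bus_voltage_v


def conduction_loss_w(power_w: float, bus_voltage_v: float,
                      path_resistance_ohm: float) -> float:
    """Resistive loss in the distribution path (P_loss = I^2 * R)."""
    current = bus_current_a(power_w, bus_voltage_v)
    return current ** 2 * path_resistance_ohm


if __name__ == "__main__":
    RACK_POWER_W = 100_000.0       # hypothetical rack power
    PATH_RESISTANCE_OHM = 0.0005   # hypothetical busbar/cable resistance
    for voltage in (48.0, 400.0, 800.0):
        current = bus_current_a(RACK_POWER_W, voltage)
        loss = conduction_loss_w(RACK_POWER_W, voltage, PATH_RESISTANCE_OHM)
        print(f"{voltage:>5.0f} V bus: {current:8.1f} A, loss {loss:8.1f} W")
```

Because loss scales with the square of the current, raising the bus voltage by a factor k cuts conduction loss in the same conductor by k².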

[3] The Rise of Liquid Cooling Solutions

In the context of high-power-density cabinets, liquid cooling solutions are gaining support as they address heat dissipation requirements between racks. Liquid cooling offers superior heat dissipation capabilities while also reducing the PUE of data centers. As rack density increases to 20kW and above, various liquid cooling technologies have emerged to meet the heat dissipation demands of high-thermal-density cabinets.

Note: PUE (Power Usage Effectiveness) = Total Data Center Energy Consumption / IT Equipment Energy Consumption.
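As a small worked example of the PUE definition above, the following sketch computes PUE and the non-IT overhead from two energy readings. The input numbers are hypothetical and the helper names are illustrative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total data center energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Energy spent on cooling, power conversion, and other non-IT loads."""
    return total_facility_kwh - it_equipment_kwh


if __name__ == "__main__":
    total, it = 1300.0, 1000.0  # hypothetical meter readings in kWh
    print(f"PUE = {pue(total, it):.2f}, overhead = {overhead_kwh(total, it):.0f} kWh")
```

A PUE closer to 1.0 means a larger share of the facility's energy reaches the IT equipment, which is why more efficient cooling lowers PUE.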

[4] Novel Components and Ecosystem Synergy

To address issues such as high energy consumption in data centers and the urgent need for increased power density, collaboration between new components and ecosystems is required. Replacing silicon-based devices with SiC/GaN can improve efficiency by 3~5%. Additionally, utilizing the Photovoltaic, Energy Storage, Direct Current, and Flexible (PEDF) system to directly couple photovoltaics and energy storage with HVDC can reduce conversion stages and enhance the utilization of green electricity.

03

Technology Development Roadmap

The development of server power supply technology follows a clear trajectory: how to power the rapidly growing computational loads with greater efficiency, reliability, and density.

[1] From Traditional AC to High-Voltage DC

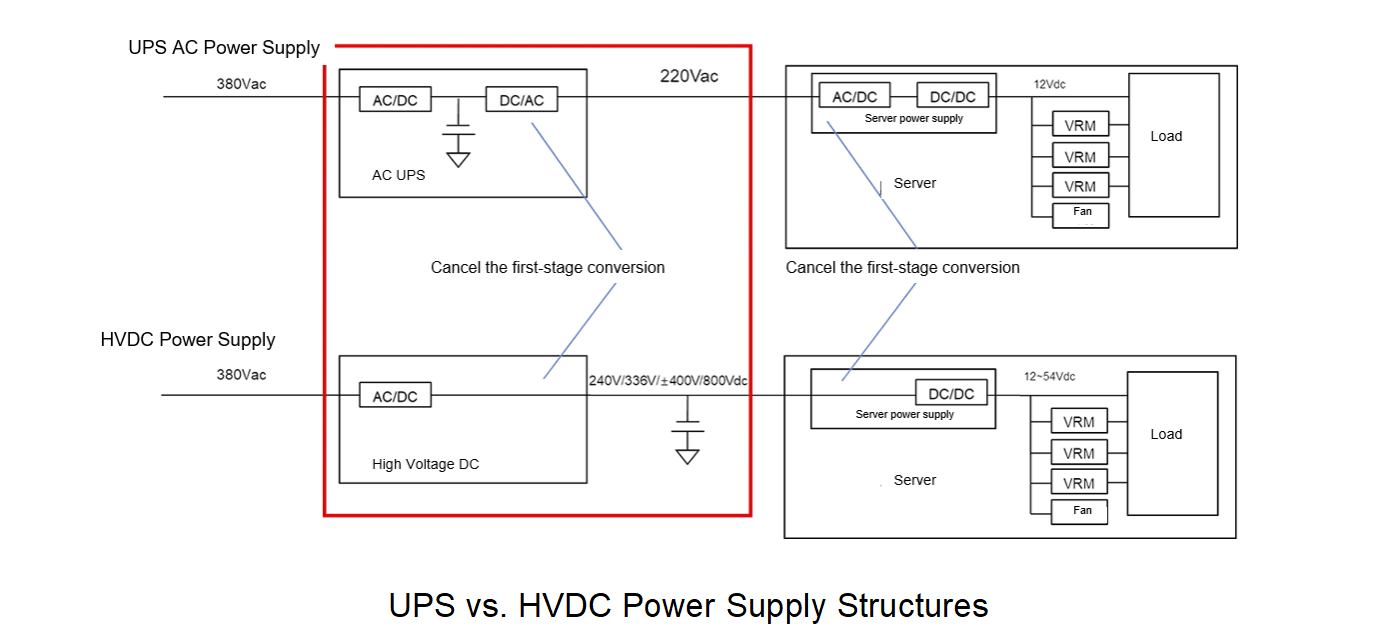

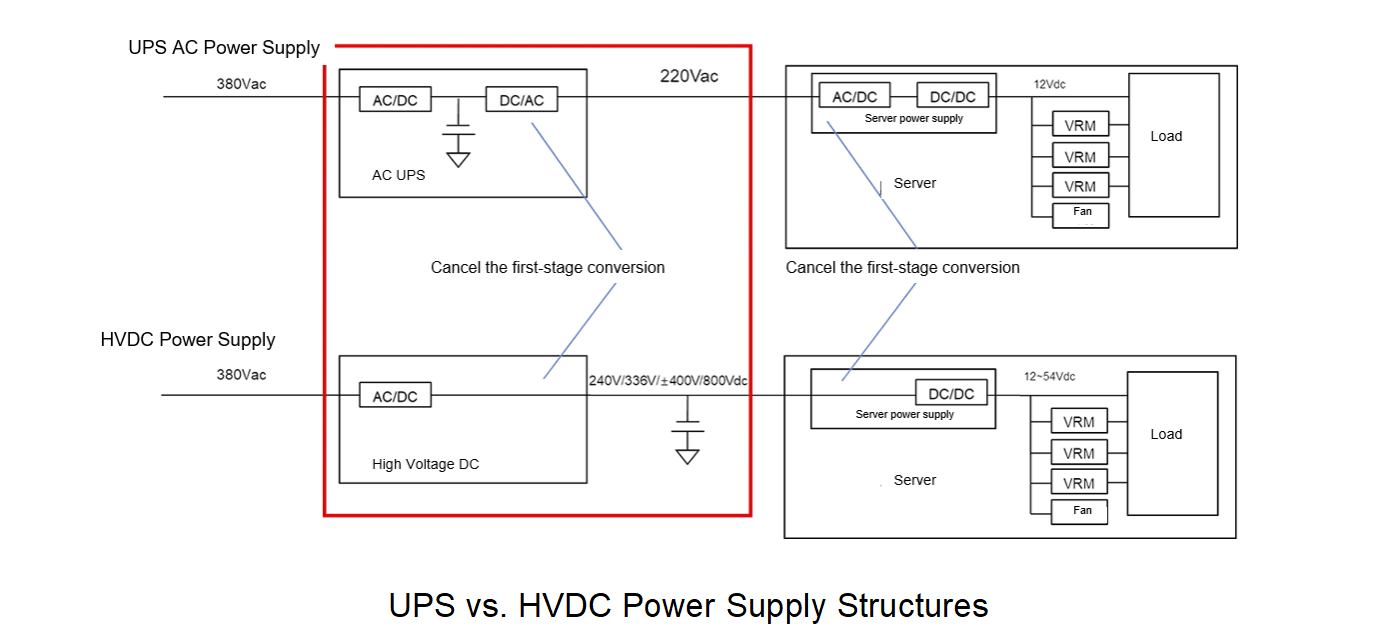

Traditional AC UPS Architecture: An Uninterruptible Power Supply (UPS) provides power to servers. The server's internal Power Supply Unit (PSU) then converts AC to DC for components such as the motherboard and CPU. This architecture suffers from multiple power conversion stages (AC→DC→AC→DC), low efficiency (typically 90~94.5%), and a large footprint.

HVDC Architecture: Developed to enhance power supply efficiency, this solution employs centralized rectifier cabinets in the machine room to convert AC power directly into high-voltage DC (e.g., 240V/336V/±400V/800V), which is then delivered directly to server racks. The AC/DC direct supply structure significantly simplifies the system architecture, reducing failure points by over 50% while markedly reducing energy transmission losses. With typical system efficiency improved to 94~96%, this approach offers both high efficiency and reliability.

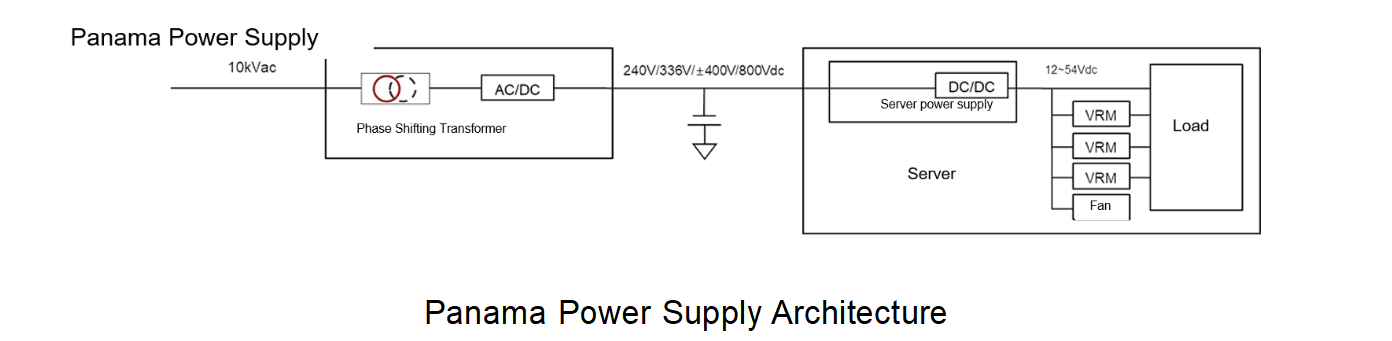

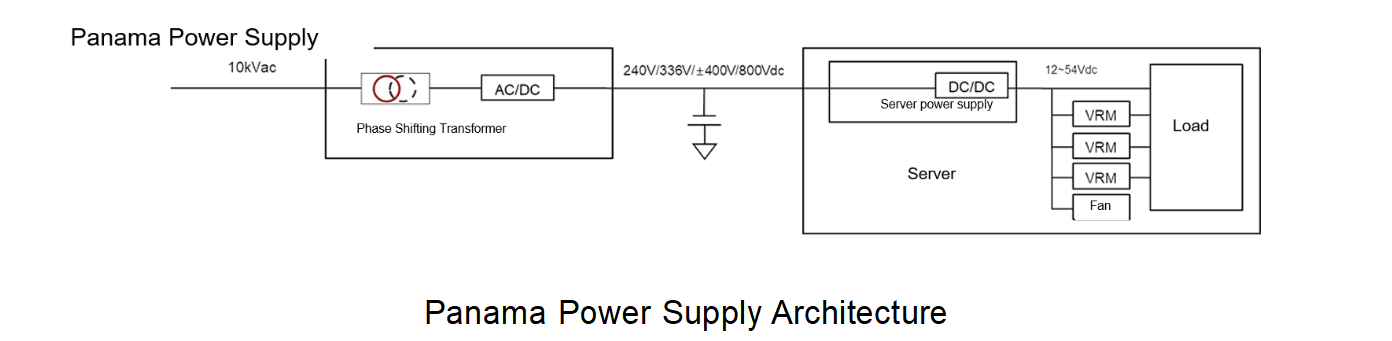

Panama Power Architecture: A further simplification of HVDC systems, this architecture replaces numerous intermediate power modules of the traditional architecture. Compared to HVDC, its most significant innovation lies in adopting phase-shifting transformers instead of traditional power frequency transformers, integrating voltage reduction and rectification. It directly converts incoming 10kV utility power into high-voltage DC (e.g., 240V/336V/±400V/800V). Currently, the implementation of Panama Power in data centers increases typical system efficiency to 97.5%.

SST (Solid-State Transformer): The fundamental working principle of SST involves first converting the input utility-frequency AC power into DC through a rectifier, then inverting the DC into high-frequency AC, followed by voltage transformation and electrical isolation via a high-frequency transformer, and finally rectifying the high-frequency AC back to DC output at the required voltage level. In contrast to the Panama Power architecture, the solid-state transformer—based on power electronics conversion technology and featuring a three-stage power conversion architecture—utilizes a high-frequency transformer to achieve electrical isolation and voltage matching, directly completing the conversion from 10kV AC to 800V DC with an efficiency of approximately 98%.
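To put the efficiency figures quoted for the four architectures side by side, the sketch below estimates conversion loss for a given IT load. It assumes the quoted numbers are end-to-end system efficiencies and, where a range is given, uses the upper end. The 1 MW load and the per-stage efficiencies in the chain example are hypothetical.

```python
from math import prod

# Efficiencies quoted in the text (upper end where a range is given).
ARCHITECTURE_EFFICIENCY = {
    "AC UPS": 0.945,
    "HVDC": 0.96,
    "Panama Power": 0.975,
    "SST": 0.98,
}

HOURS_PER_YEAR = 8760


def chain_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of cascaded conversion stages (product of stages)."""
    return prod(stage_efficiencies)


def loss_kw(load_kw: float, efficiency: float) -> float:
    """Power lost in conversion when delivering load_kw at a given efficiency."""
    return load_kw / efficiency - load_kw


if __name__ == "__main__":
    LOAD_KW = 1000.0  # hypothetical IT load
    for name, eff in ARCHITECTURE_EFFICIENCY.items():
        loss = loss_kw(LOAD_KW, eff)
        print(f"{name:<13} loss {loss:6.1f} kW, "
              f"about {loss * HOURS_PER_YEAR / 1000:6.0f} MWh per year")
    # Hypothetical three-stage chain, showing why fewer stages helps.
    print(f"Three stages at 98% each: {chain_efficiency([0.98] * 3):.3f}")
```

Each stage multiplies into the overall figure, so removing a conversion stage improves efficiency even when every individual stage is already efficient.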

[2] Technological Innovations in PSU

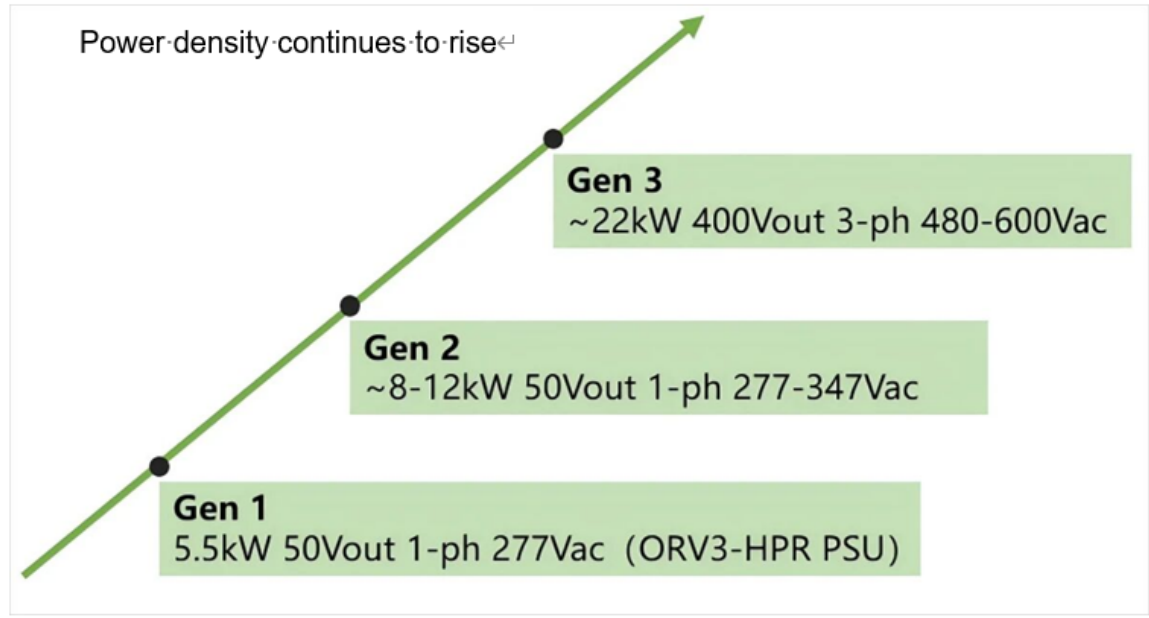

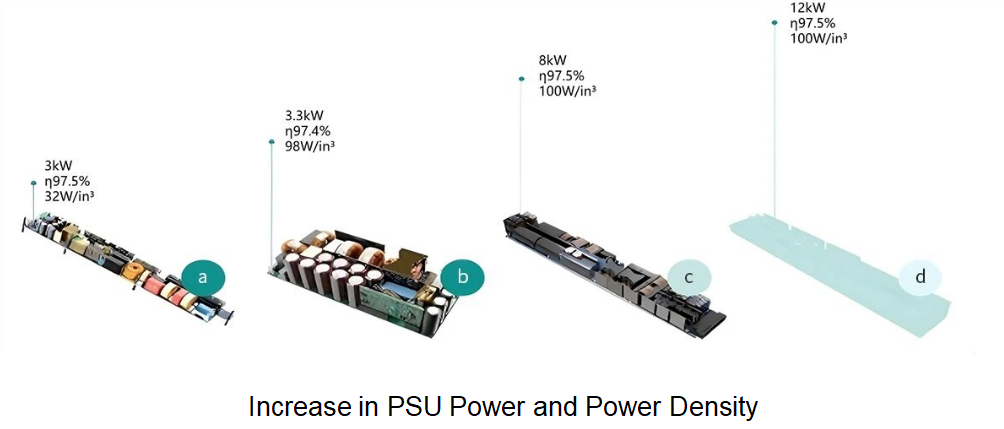

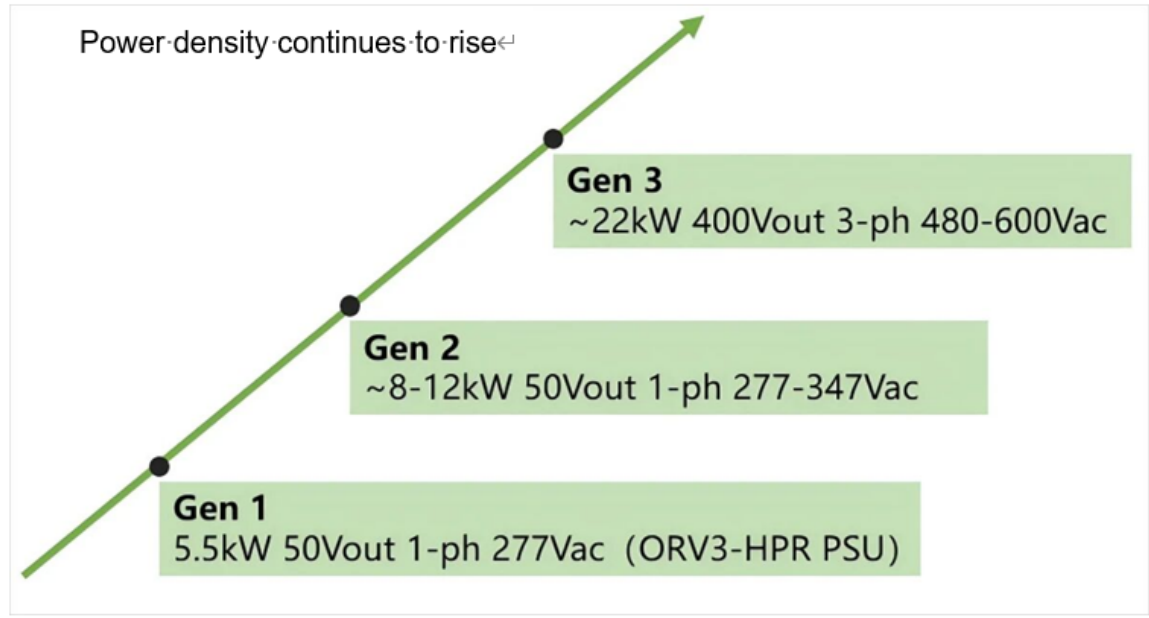

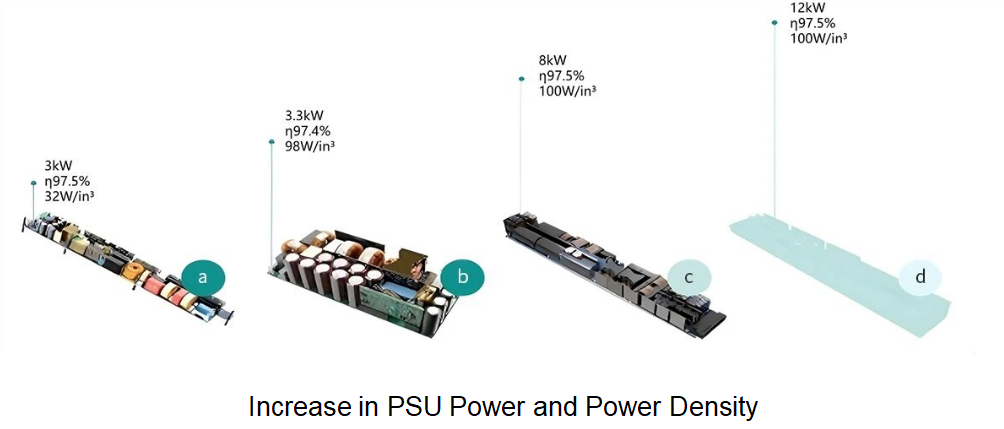

Continuous Increase in Power Density: Taking a leading manufacturer as an example, in its PSU upgrade roadmap, the PSU architecture advances from 3.3kW and 5.5kW to 12kW and 22kW power ratings. Accordingly, the power density increases from 32W/in³ to 100W/in³. This enhancement is primarily achieved by leveraging semiconductor materials such as silicon, SiC, and GaN, enabling higher power output within a limited space.

Evolving Voltage Platforms: PSU output voltages are progressively rising to meet growing computing demands. Early CRPS standards primarily used 12V, 5V, and 3.3V to accommodate the low power requirements of traditional servers. Subsequently, the 48V intermediate bus emerged, aligning with the ORv3 standard to balance efficiency and device compatibility. In May 2025, NVIDIA announced at COMPUTEX that its 800V HVDC architecture will be fully deployed by 2027 to support AI computing clusters with per-rack power exceeding 1MW.

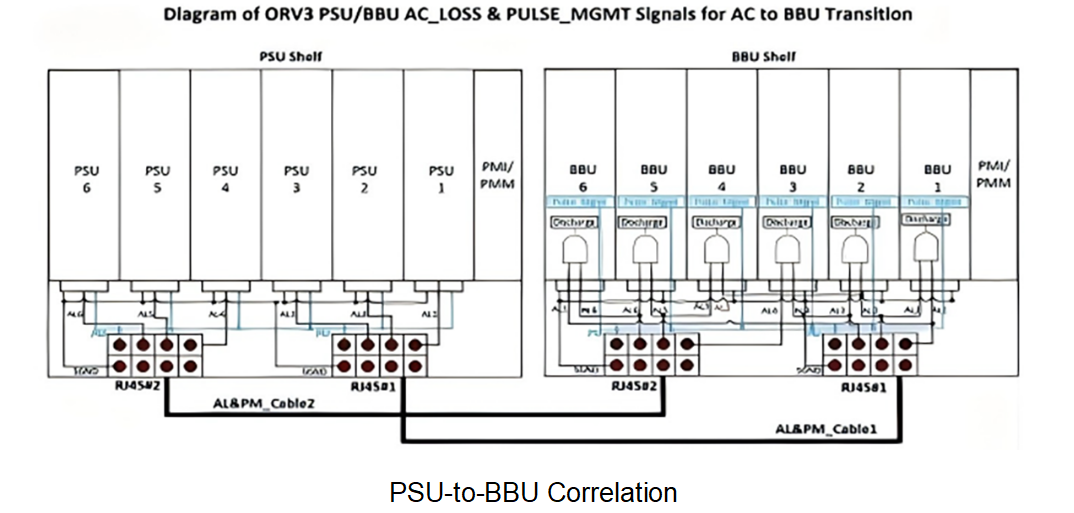

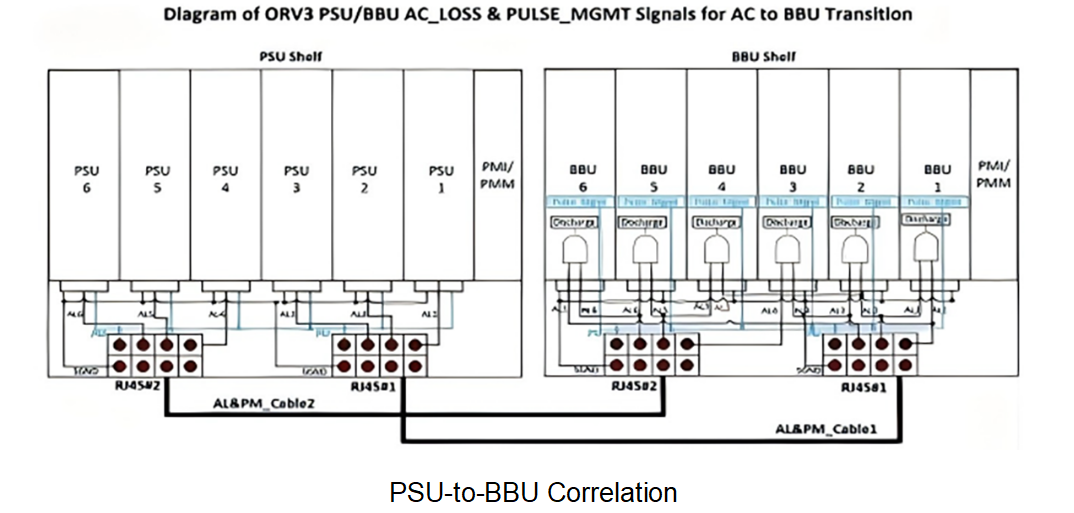

Enhancing System Fault Redundancy through BBU Integration: The Battery Backup Unit (BBU), composed of battery packs, Battery Management System (BMS), charger, and other functional modules, is configured in a one-to-one correspondence with the Power Supply Unit (PSU). When the bus voltage remains below 48.5V for more than 2 milliseconds, the BBU module activates its discharge mode and takes control of the bus voltage within 2ms, providing temporary power support to the server during a power outage.
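The trigger condition described above (bus voltage below 48.5V for more than 2ms) can be sketched as a small undervoltage qualifier. This is a simplified illustration, not any vendor's firmware: the class name, the sampled-update interface, and the latching behavior are assumptions, and the handover of bus regulation itself is not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BbuMonitor:
    """Decides when a BBU should enter discharge mode (illustrative only)."""
    threshold_v: float = 48.5   # undervoltage threshold from the text
    hold_time_us: int = 2000    # 2 ms qualification time from the text
    discharging: bool = False
    _below_since_us: Optional[int] = None

    def update(self, t_us: int, bus_voltage_v: float) -> bool:
        """Feed one timestamped bus-voltage sample; return discharge state."""
        if self.discharging:
            return True  # assumption: latched once the BBU holds the bus
        if bus_voltage_v >= self.threshold_v:
            self._below_since_us = None  # voltage recovered, reset the timer
        elif self._below_since_us is None:
            self._below_since_us = t_us  # first sample below the threshold
        elif t_us - self._below_since_us > self.hold_time_us:
            self.discharging = True      # undervoltage lasted longer than 2 ms
        return self.discharging


if __name__ == "__main__":
    monitor = BbuMonitor()
    # Hypothetical samples every 500 us: healthy bus, then a sustained sag.
    for t_us in range(0, 4000, 500):
        voltage = 50.0 if t_us < 500 else 47.0
        print(t_us, voltage, monitor.update(t_us, voltage))
```

Timestamps are kept as integer microseconds so the 2 ms comparison is exact. The qualification time means short dips do not trigger a discharge.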

04

Core Challenges

[1] Extremely High Requirements for Response Speed

Currently, the power consumption of computing chips is witnessing a rapid surge. The power draw of the GB300 NVL72 cabinet is projected to reach 135~140 kW, with GPU chips exhibiting extremely high transient current demands. During AI model training, processor loads can abruptly shift from low-power to high-power states, causing current requirements to spike dramatically within milliseconds. The load variation rate can reach 10~60A/μs. If the power supply's response speed is insufficient to adjust the voltage promptly, it may lead to processor voltage sag, affecting performance and system stability—or even causing hardware damage. This imposes extremely stringent requirements on the response speed of the PSU.
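To give a feel for what a 10~60A/μs load variation rate implies, the sketch below computes how quickly a current step completes and the voltage dropped across parasitic inductance in the delivery path (V = L · di/dt). The step size and inductance are hypothetical values, and the model ignores decoupling capacitance and regulator control-loop behavior.

```python
def ramp_time_us(current_step_a: float, slew_rate_a_per_us: float) -> float:
    """Time for the load current to complete a step at a given slew rate."""
    return current_step_a / slew_rate_a_per_us


def inductive_droop_v(path_inductance_nh: float,
                      slew_rate_a_per_us: float) -> float:
    """Voltage across parasitic inductance: V = L * di/dt.

    1 nH * 1 A/us = 1e-9 H * 1e6 A/s = 1e-3 V.
    """
    return path_inductance_nh * slew_rate_a_per_us * 1e-3


if __name__ == "__main__":
    CURRENT_STEP_A = 500.0      # hypothetical load step
    PATH_INDUCTANCE_NH = 0.5    # hypothetical parasitic inductance
    for slew in (10.0, 60.0):   # range quoted in the text, in A/us
        t = ramp_time_us(CURRENT_STEP_A, slew)
        droop = inductive_droop_v(PATH_INDUCTANCE_NH, slew)
        print(f"{slew:4.0f} A/us: step completes in {t:5.1f} us, "
              f"inductive droop {droop * 1000:4.1f} mV")
```

Even sub-nanohenry inductance produces a visible droop at these slew rates on a low-voltage core rail.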

[2] Difficulty in Testing and Reproducing Load Characteristics

Conventional electronic loads struggle to accurately replicate dynamic load changes of several tens of amperes per microsecond. Meanwhile, as PSU power density continues to increase, Power Shelf capacity has now exceeded 33kW per 1U, and rack power has long since surpassed the 100kW mark, a significant step up in power density.

For server power supply manufacturers, how to equip their R&D laboratories and production lines with electronic load equipment that features fast current response, wide power range, and high compatibility has become a new challenge.

[3] Engineering Challenges of Liquid Cooling Technology

Current air-cooling technology has reached its thermal dissipation limit. For AI data centers deploying high-power consumption chips such as the GB300, liquid cooling is not merely an option for reducing PUE; it is the only viable thermal solution. While liquid cooling offers superior heat dissipation efficiency, it introduces challenges such as leakage risks, complex maintenance, difficult retrofitting, and high construction costs.

[4] Technology Gap in Green Energy Integration

The power demand of large-scale AI data centers is growing explosively, necessitating the integration of renewable energy sources. However, the output power fluctuations of photovoltaic and wind power can reach ±30%, leading to bus voltage variations exceeding 10% and compromising server stability. The introduction of supercapacitors or flywheel energy storage as buffering mechanisms is required, albeit at the cost of increased system complexity.
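As a rough sizing sketch for the buffering mentioned above, the snippet below estimates the energy a buffer must supply to cover a power shortfall, and the supercapacitor capacitance needed to store it, using the usable-energy relation E = ½·C·(V_max² − V_min²). All numeric inputs other than the 30% fluctuation are hypothetical, and real designs must also account for converter losses, aging, and control dynamics.

```python
def buffer_energy_j(pv_rated_w: float, fluctuation_fraction: float,
                    duration_s: float) -> float:
    """Energy needed to cover a power shortfall for a given duration."""
    return pv_rated_w * fluctuation_fraction * duration_s


def required_capacitance_f(energy_j: float, v_max: float, v_min: float) -> float:
    """Capacitance whose usable energy between v_max and v_min equals energy_j.

    Usable energy of a capacitor: E = 0.5 * C * (v_max^2 - v_min^2).
    """
    if v_max <= v_min:
        raise ValueError("v_max must exceed v_min")
    return 2.0 * energy_j / (v_max ** 2 - v_min ** 2)


if __name__ == "__main__":
    PV_RATED_W = 500_000.0   # hypothetical PV array rating
    FLUCTUATION = 0.30       # fluctuation magnitude quoted in the text
    DURATION_S = 5.0         # hypothetical ride-through time
    V_MAX, V_MIN = 800.0, 600.0  # hypothetical capacitor voltage window

    energy = buffer_energy_j(PV_RATED_W, FLUCTUATION, DURATION_S)
    cap = required_capacitance_f(energy, V_MAX, V_MIN)
    print(f"Buffer energy: {energy / 1000:.0f} kJ, capacitance: {cap:.2f} F")
```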

[5] Standardization and Compatibility Dilemmas

The rapid evolution of technology is accompanied by challenges in standardization and compatibility. For instance, PSUs from different manufacturers face connector incompatibility issues (such as the coexistence of OCP ORv3-mandated 12VHPWR interfaces and traditional 8-pin interfaces), requiring data centers to stock multiple cable types while increasing operational costs by 15%.

The challenge of achieving a smooth transition between legacy and new technologies, along with enabling seamless collaboration among different solutions, requires coordinated efforts across the industry chain—including chip manufacturers, power supply vendors, server makers, and data center operators. This challenge must be resolved through the establishment of unified industry standards and specifications.

05

Summary

As the "heart" of computing infrastructure and a "carbon reduction hub," AI server power supplies have evolved from a supporting role to a key point and technological high ground determining the development of the AI industry. In response to the growing testing demands, Kewell will soon launch a dedicated test solution for AI server power supplies—stay tuned!
